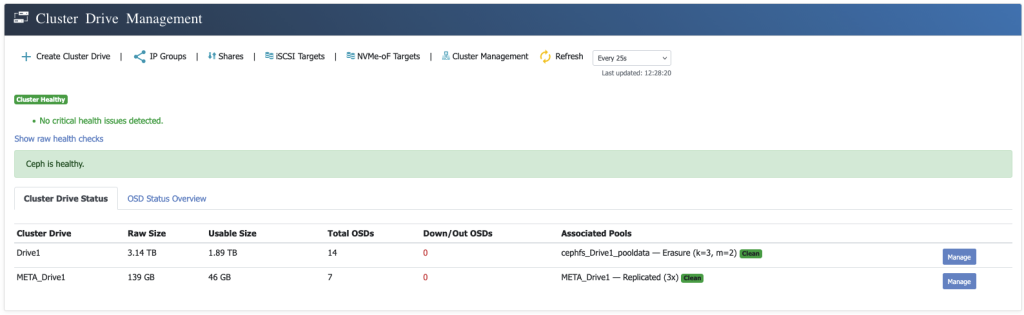

eEVOS integrates Ceph as its scale-out, fault-tolerant storage backend, providing flexibility, performance, and reliability for enterprise virtualization environments. Ceph in eEVOS is more than shared storage: it is a distributed system designed for resilience, scalability, and fine-grained performance control.

Clusters start with as few as 3 hosts and scale linearly up to 32 hosts, enabling predictable growth and capacity expansion without service interruption.

Advanced Storage Architecture

Ceph in eEVOS allows you to build storage pools tailored to specific workloads, with full support for:

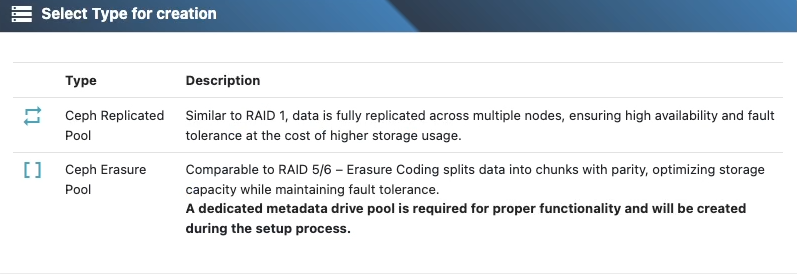

Replication

- Classic data protection via copy-based redundancy

- Data is replicated (e.g., 3x) across multiple hosts

- Ensures high availability and fault tolerance

- Simplifies recovery in the event of host or disk failure

Erasure Coding

- Space-efficient redundancy method that splits data into data chunks and parity chunks (e.g., 4+2, 8+3)

- Saves roughly 50% or more raw capacity compared to 3x replication (for example, 4+2 stores 1.5x the data size instead of 3x)

- Suitable for archival or less latency-sensitive workloads

- Fully supported within eEVOS storage pools
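The capacity difference between the two protection schemes follows from simple arithmetic. The sketch below is illustrative only: the function names are hypothetical and not part of eEVOS or Ceph, and it ignores filesystem and metadata overhead. It computes how much raw capacity each scheme consumes per usable byte, using the 3x, 4+2, and 8+3 examples mentioned above.

```python
from fractions import Fraction


def raw_per_usable_replicated(copies: int) -> Fraction:
    """Raw bytes consumed per usable byte with N-way replication."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return Fraction(copies)


def raw_per_usable_erasure(k: int, m: int) -> Fraction:
    """Raw bytes consumed per usable byte with k data chunks + m parity chunks."""
    if k < 1 or m < 0:
        raise ValueError("k must be >= 1 and m must be >= 0")
    return Fraction(k + m, k)


def savings_vs_replication(k: int, m: int, copies: int = 3) -> Fraction:
    """Share of raw capacity saved by k+m erasure coding relative to replication."""
    return 1 - raw_per_usable_erasure(k, m) / raw_per_usable_replicated(copies)


if __name__ == "__main__":
    for k, m in [(4, 2), (8, 3)]:
        overhead = float(raw_per_usable_erasure(k, m))
        saved = float(savings_vs_replication(k, m))
        print(f"{k}+{m}: {overhead:.3f}x raw per usable byte, "
              f"{saved:.1%} less raw capacity than 3x replication")
```

Under these assumptions, 4+2 needs exactly half the raw capacity of 3x replication, and 8+3 needs slightly less than half.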

Dedicated DB/WAL Devices

- Support for placing RocksDB database (DB) and Write-Ahead Log (WAL) on separate SSD/NVMe devices

- Boosts performance for HDD-based OSDs by accelerating metadata and write operations

- Ideal for hybrid setups (HDD for capacity, SSD for DB/WAL)

Metadata Placement Control

- Separate VM metadata (image structure, snapshots, etc.) from data payload

- Store metadata on faster disks to speed up VM boot times, cloning, and snapshot operations

Flexible Pool Design

eEVOS allows you to create multiple storage pools with independent policies, each optimized for different workloads:

- High-performance SSD pools with replication for latency-sensitive applications

- HDD-based pools with erasure coding for backup or archival data

- Mixed pools with tiering strategies based on storage class

Each pool can be assigned to VMs or services individually, giving you per-workload control over performance and fault tolerance levels.
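To make the idea of independent pool policies concrete, here is a minimal model of pools with different protection schemes. All class, field, and pool names are hypothetical and do not reflect the eEVOS interface or API. It assumes each copy or chunk is placed on a different host (a host-level failure domain), which is why an erasure-coded pool needs at least k+m hosts. The "failures tolerated" figure refers to data loss only; whether a pool stays writable during a failure also depends on pool settings not modeled here.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class Replicated:
    copies: int = 3

    def failures_tolerated(self) -> int:
        # Data survives as long as one copy remains.
        return self.copies - 1

    def min_hosts(self) -> int:
        # One copy per host (assumed host-level failure domain).
        return self.copies


@dataclass(frozen=True)
class ErasureCoded:
    k: int  # data chunks
    m: int  # parity chunks

    def failures_tolerated(self) -> int:
        # Any m chunks can be lost and the data can still be rebuilt.
        return self.m

    def min_hosts(self) -> int:
        # One chunk per host (assumed host-level failure domain).
        return self.k + self.m


@dataclass(frozen=True)
class Pool:
    name: str
    media: str  # e.g. "ssd" or "hdd"
    protection: Union[Replicated, ErasureCoded]


def pools_that_fit(pools: List[Pool], host_count: int) -> List[str]:
    """Names of pools whose protection scheme can be placed on host_count hosts."""
    return [p.name for p in pools if p.protection.min_hosts() <= host_count]


# Hypothetical example pools mirroring the workloads listed above.
POOLS = [
    Pool("vm-fast", "ssd", Replicated(copies=3)),
    Pool("archive", "hdd", ErasureCoded(k=4, m=2)),
]

if __name__ == "__main__":
    for hosts in (3, 6):
        print(hosts, "hosts:", pools_that_fit(POOLS, hosts))
```

In this model a 3-host cluster can carry the replicated pool but not the 4+2 pool, which needs six hosts, so the choice of erasure-coding profile is tied to cluster size.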

High Availability and Data Integrity

- Self-Healing – Ceph automatically detects failures and rebuilds lost data from replicas or erasure fragments

- No Single Point of Failure – Data is distributed across multiple hosts and disks

- Automatic Rebalancing – When capacity changes (e.g., adding a host), Ceph rebalances data in the background without disrupting service
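As a rough guide to how much data a rebalance involves, the sketch below estimates the ideal share of stored data that migrates when hosts are added. It assumes all hosts have equal capacity and that data is spread evenly, so it is a lower-bound estimate rather than a prediction; real clusters may move somewhat more. The function name is hypothetical.

```python
from fractions import Fraction


def fraction_moved_on_add(existing_hosts: int, added_hosts: int = 1) -> Fraction:
    """Ideal share of stored data that moves to newly added, equally sized hosts.

    With even distribution, the new hosts should end up holding
    added / (existing + added) of the data, and only that share needs to move.
    """
    if existing_hosts < 1 or added_hosts < 1:
        raise ValueError("host counts must be positive")
    return Fraction(added_hosts, existing_hosts + added_hosts)


if __name__ == "__main__":
    for existing in (3, 15, 31):
        share = fraction_moved_on_add(existing)
        print(f"{existing} -> {existing + 1} hosts: about {float(share):.1%} of data moves")
```

The share shrinks as the cluster grows: expanding from 3 to 4 hosts moves about a quarter of the data in this model, while expanding from 31 to 32 moves about 3%.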

Seamless Integration with eEVOS

- Fully managed via the eEVOS web interface – no CLI or deep Ceph knowledge required

- Monitored with health dashboards and usage metrics

- Easily assign pools to VMs, backup jobs, or containers

Summary of Key Benefits

- Scale from 3 to 32 hosts per cluster

- Choose between replication and erasure coding

- Optimize performance with DB/WAL separation and metadata tuning

- Ideal for mixed workloads: virtualization, backups, object storage

- Native integration with eEVOS HA and migration features

- Open-source, vendor-neutral alternative to proprietary SDS platforms like VMware vSAN